Content Moderation at Scale: Defending Brands in AI-Driven Chat

How scalable AI content moderation protects brand reputation while enabling instant, human-like customer experiences on WhatsApp.

In the era of AI-powered commerce, brands face a new balancing act: delivering frictionless, WhatsApp-style interactions while ensuring those conversations remain safe, respectful, and aligned with brand values. As chat becomes the primary customer touchpoint, content moderation is no longer just a social media concern—it’s a business-critical capability. In this post, we explore how AI-driven assistants like bKlug are designed to enforce real-time content moderation at scale, shielding brands from offensive behavior and keeping experiences high quality, all without slowing down the conversation.

The Rise of Conversational Commerce—And the Risk That Comes With It

Consumers have shifted how they buy. Static web forms and impersonal funnels are being replaced with chat-based experiences that mirror how people text friends and family. This shift toward asynchronous, conversational commerce brings speed and convenience—but it also opens the door to risks. Offensive content, inappropriate queries, and harmful language can now show up in what used to be a controlled sales environment.

Unchecked, this threatens not only customer safety but also brand perception. A single toxic interaction can go viral, causing real reputational damage.

"When your brand starts talking, it also has to learn how to listen—and moderate."

Why Traditional Moderation Tools Don’t Work in AI Chat

Legacy moderation tools were built for forums or social media platforms, not real-time AI-driven conversations. Here’s why they fall short in this new environment:

- Delay Kills Experience: Traditional systems rely on human review or batched processing. In a sales chat, even seconds of delay ruin the flow.

- One-size Filters Break Easily: Keyword filters alone can’t catch context, slang, or multi-language abuse. Over-blocking can ruin valid conversations, while under-blocking lets toxicity slip through.

- Lack of Conversational Memory: The meaning of a message often depends on what came before it. Tools that judge each message in isolation miss escalation patterns and repeated abuse within the same chat.
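To make the keyword-filter problem concrete, here is a minimal Python sketch. The blocklist and both function names are hypothetical and chosen only for illustration; they do not represent any vendor's actual filter. The first function over-blocks an innocent product question, and the second fixes that case but still misses a trivially obfuscated insult.

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKLIST = {"ass", "hell"}


def naive_substring_filter(message: str) -> bool:
    """Flag a message if a blocked term appears anywhere inside it."""
    lowered = message.lower()
    return any(term in lowered for term in BLOCKLIST)


def whole_word_filter(message: str) -> bool:
    """Flag a message only when a blocked term appears as a whole word."""
    words = re.findall(r"[a-z']+", message.lower())
    return any(word in BLOCKLIST for word in words)


question = "Do you sell classic bass guitars?"
obfuscated = "you are an a s s"

# Over-blocking: "classic" and "bass" both contain a blocked substring.
print(naive_substring_filter(question))   # True

# Matching whole words fixes that case...
print(whole_word_filter(question))        # False

# ...but spacing out the letters slips past both filters (under-blocking).
print(whole_word_filter(obfuscated))      # False
```

Neither variant looks at context or intent, which is the gap the rest of this post is concerned with.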

Modern brands need a new approach.

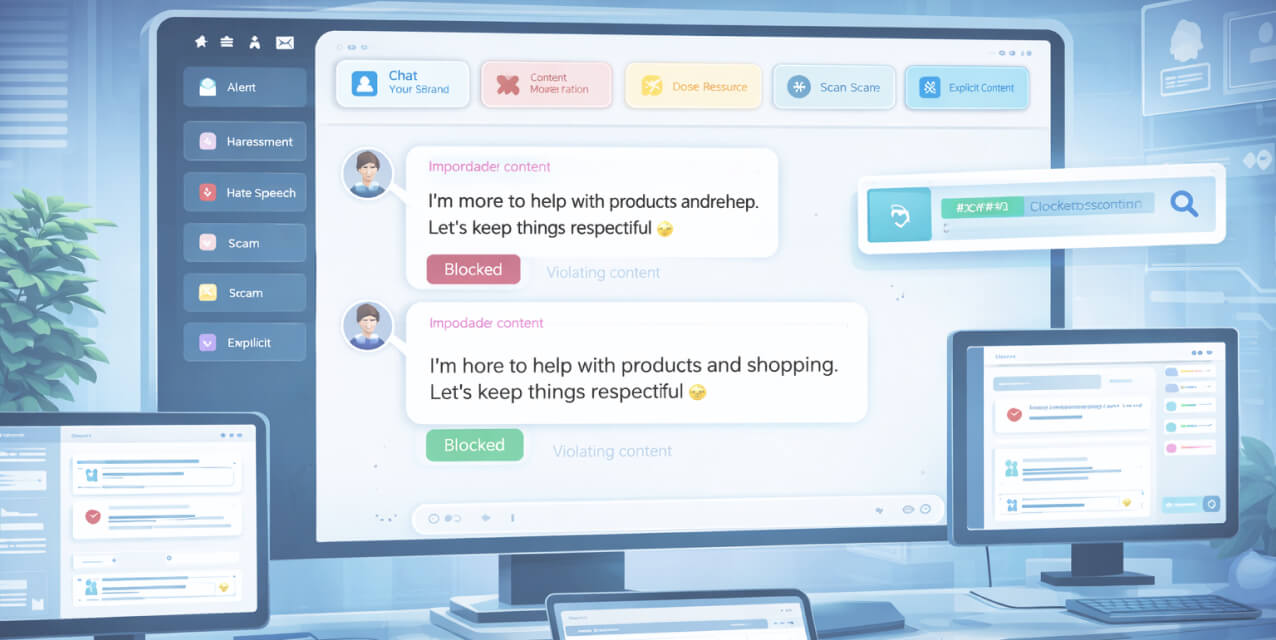

What Scalable AI Moderation Looks Like

To handle the scale of chat interactions across multiple stores, languages, and product types, bKlug’s system takes a proprietary approach rooted in real-time moderation:

- Multi-Layer Filtering: Offensive content is detected using multiple AI models trained across text, slang, emojis, and image-based content.

- Intent Analysis: Not just what is said, but why. The system analyzes user intention to distinguish between curiosity, humor, and harassment.

- Memory-Aware Blocking: The assistant remembers earlier messages in the chat, helping it avoid escalation patterns and flag repeated issues.

- Instant Deflection: If something unsafe is said, the assistant can politely deflect, reset the topic, or escalate to a human if needed.

This moderation pipeline operates invisibly in every chat, across markets, languages, and customer types.
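The layers above can be sketched in a few lines of Python. This is an illustrative outline under stated assumptions, not bKlug's implementation: `looks_abusive` is a stand-in for the trained models and intent analysis, and the names `Conversation`, `Action`, `moderate`, and the `max_strikes` threshold are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    ALLOW = "allow"        # answer normally
    DEFLECT = "deflect"    # reply politely and reset the topic
    ESCALATE = "escalate"  # hand the chat to a human agent


@dataclass
class Conversation:
    """Per-chat memory: message history and how many messages were flagged."""
    history: list[str] = field(default_factory=list)
    flagged_count: int = 0


# Placeholder detector. A real system would call trained classifiers here.
ABUSIVE_TERMS = {"idiot", "stupid"}  # hypothetical


def looks_abusive(message: str) -> bool:
    words = (w.strip(".,!?") for w in message.lower().split())
    return any(w in ABUSIVE_TERMS for w in words)


def moderate(conv: Conversation, message: str, max_strikes: int = 2) -> Action:
    """Decide what to do with one incoming message, using chat memory."""
    conv.history.append(message)
    if not looks_abusive(message):
        return Action.ALLOW
    conv.flagged_count += 1
    # Repeated abuse in the same chat is escalated instead of deflected again.
    if conv.flagged_count > max_strikes:
        return Action.ESCALATE
    return Action.DEFLECT


conv = Conversation()
for text in ["Do you have this in blue?", "You idiot", "stupid bot", "idiot!"]:
    print(text, "->", moderate(conv, text).value)
```

In this sketch the first two flagged messages are deflected and the third is escalated, because the decision depends on the conversation's flag count and not only on the current message.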

How bKlug Defends Brands—Without Compromising Experience

bKlug was built from day one with content moderation embedded into its core logic—not as an afterthought. This enables brands to confidently deploy conversational commerce at scale, even in high-traffic or sensitive environments.

Key features include:

- Security-first architecture designed by professionals with banking-grade experience

- Offensive content blocking at both the language model level and the chat interface level

- Brand protection filters that stop users from tricking the assistant into unsafe or inappropriate responses

- Human handoff for edge cases where conversation moderation requires empathy or discretion

All of this happens in milliseconds, ensuring that the buyer experience remains fluid—even while safety protocols run underneath.

What Gets Moderated—And How

Content moderation isn’t only about profanity. Here’s what bKlug’s system is designed to detect and handle:

- Harassment and abusive language

- Discrimination and hate speech

- Explicit content or grooming attempts

- Scams, phishing language, or impersonation

- Misinformation or brand attacks

Instead of deleting messages silently or blocking users abruptly, the assistant responds with tact. For example:

“I’m here to help with products and shopping. Let’s keep things respectful 😊”

This helps reset the tone without escalating tension.

The Impact on Brand Teams

Without scalable moderation, CX, legal, and brand teams would have to monitor thousands of daily conversations manually. That’s not just inefficient—it’s impossible.

bKlug eliminates this operational burden by handling:

- Real-time moderation and logging

- Escalation to human agents only when necessary

- Audit trails for any flagged conversations, enabling compliance and review

This frees up internal teams to focus on strategy—not incident response.
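As a rough illustration of what an audit-trail entry for a flagged conversation might contain, the Python sketch below serializes one event as a JSON line. The field names and the `audit_record` helper are assumptions made for this example; the actual log format is not described in this post.

```python
import json
from datetime import datetime, timezone


def audit_record(conversation_id: str, message: str,
                 category: str, action: str) -> str:
    """Build one JSON line describing a flagged message (hypothetical schema)."""
    return json.dumps(
        {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "conversation_id": conversation_id,
            "category": category,  # e.g. "harassment", "phishing"
            "action": action,      # e.g. "deflect", "escalate"
            "message": message,
        },
        ensure_ascii=False,        # keep emojis and non-Latin text readable
    )


line = audit_record("conv-001", "example flagged text", "harassment", "deflect")
print(line)
```

Appending one such line per flagged message gives reviewers a timestamped, searchable record without anyone having to read every chat.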

AI Moderation and the Global Market

Brands operating in multiple countries face extra complexity: cultural norms differ, slang evolves quickly, and what’s offensive in one place may be benign in another.

bKlug supports multilingual moderation out of the box. The assistant understands and filters content in local dialects and can detect changes in tone and context dynamically, which is crucial for brands expanding into Latin America, the Middle East, or Southeast Asia.

Can AI Really Handle This Alone?

Not completely—but it gets most of the way there. AI systems like bKlug are designed to handle the bulk of moderation autonomously, with human backup for:

- Gray-area responses

- Crisis moments

- High-risk conversations (e.g. minors, sensitive content)

This hybrid model balances scale with sensitivity.
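One common way to implement this kind of hybrid routing is with confidence thresholds: the system acts on its own when a classifier is very sure, and sends everything in between to a person. The Python sketch below assumes a classifier that returns a category and a confidence score; the category names, the thresholds, and the `route` function are hypothetical.

```python
# Categories that always go to a human, regardless of model confidence.
HIGH_RISK = {"minor_safety", "crisis"}  # hypothetical category names


def route(category: str, confidence: float,
          low: float = 0.4, high: float = 0.9) -> str:
    """Return who handles a message: the assistant or a human reviewer.

    `confidence` is the classifier's estimated probability that the
    message is unsafe.
    """
    if category in HIGH_RISK:
        return "human"
    if confidence >= high:
        return "auto_deflect"  # clearly unsafe: assistant deflects on its own
    if confidence <= low:
        return "auto_allow"    # clearly fine: assistant answers normally
    return "human"             # gray area: needs human judgment


print(route("harassment", 0.97))    # auto_deflect
print(route("harassment", 0.65))    # human
print(route("harassment", 0.10))    # auto_allow
print(route("minor_safety", 0.10))  # human
```

Widening the gap between `low` and `high` sends more conversations to people, and narrowing it automates more, so the thresholds are where a team would tune the balance between scale and sensitivity.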

Why This Matters More Than Ever

When a brand’s voice literally speaks through AI, safety can’t be an afterthought. Content moderation at scale is not optional; it is fundamental to modern brand protection.

With bKlug, moderation isn’t something you plug in later. It’s part of the operating system.

And when moderation is seamless, the result is a customer experience that feels friendly, fast, and safe.